Some general stuff I learned about data packets sent over the internet:

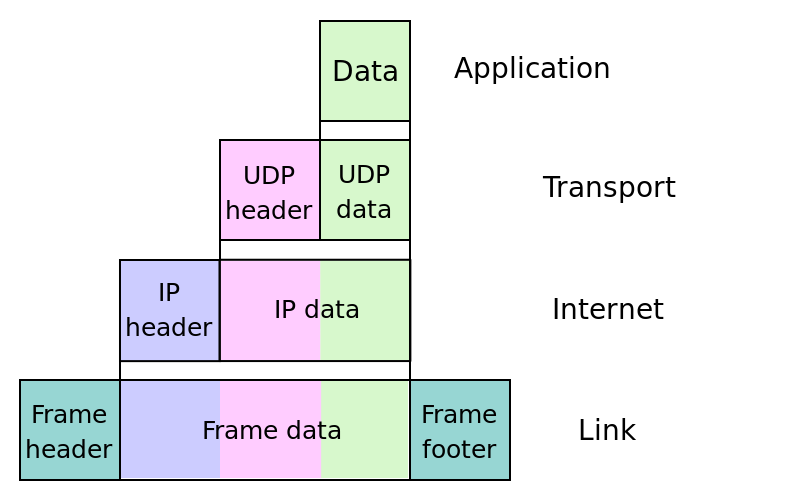

Packets have a header, a payload (or body), and sometimes a trailer. In an Ethernet frame, for example, the trailer holds a checksum used to detect transmission errors. I found this nice definition that explains the header includes 20 bytes (usually) of metadata about the payload, including things like the protocol governing the format of the payload. That 20-byte figure is the usual size of an IPv4 header. HTTP headers, which travel inside the payload, can have many, many potential fields! Requests and responses also use different fields. When you use HTTPS, both the HTTP headers and the body are encrypted, though the lower-level IP and TCP headers are not.
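To make the "20 bytes of metadata" idea concrete, here is a minimal Python sketch that unpacks the fixed 20-byte part of an IPv4 header, including the protocol field that says what kind of payload follows. The example header is hand-built (made-up private addresses, checksum left at 0), not something from my capture.

```python
import socket
import struct

# A few values of the IPv4 "protocol" field, which names the payload's protocol.
PROTOCOLS = {1: "ICMP", 6: "TCP", 17: "UDP", 50: "ESP"}


def parse_ipv4_header(data: bytes) -> dict:
    """Unpack the fixed 20-byte part of an IPv4 header (options, if any, are ignored)."""
    (version_ihl, _tos, total_length, _ident, _flags_frag,
     ttl, proto, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", data[:20])
    return {
        "version": version_ihl >> 4,
        "header_bytes": (version_ihl & 0x0F) * 4,  # IHL counts 32-bit words; 5 -> 20 bytes
        "total_length": total_length,              # header + payload, in bytes
        "ttl": ttl,
        "protocol": PROTOCOLS.get(proto, str(proto)),
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }


# Hand-built example header (made-up addresses, checksum left at 0).
example = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 60, 0, 0, 64, 17, 0,
                      socket.inet_aton("192.168.1.2"), socket.inet_aton("192.168.1.1"))
print(parse_ipv4_header(example))
```

Everything after those 20 bytes (here, a UDP segment) is the payload, and the protocol field is what tells a tool like Wireshark how to decode it.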

Using Herbivore

Herbivore is very user-friendly software developed by Jen & Surya that shows HTTP traffic on the network you’re connected to. It only shows HTTP traffic, and it won’t show devices that are sleeping. Using Herbivore, I found that HTTP/S packets are sent when you close a tab in your browser, or when you click a tab that’s been dormant. I also found my computer was sending data to sites like http://www.trueactivist.com (a fake-news-looking site?) and pbs.twimg.com (link to w3 snoop).

Some interesting HTTP header fields I saw:

- P3P: Apparently a field for declaring a P3P (Platform for Privacy Preferences) policy, but P3P was never fully implemented in most browsers. Now, websites set this field in order to trick browsers into allowing third-party cookies.

- upgrade-insecure-requests: A request header (sent by the browser rather than returned by the server) telling the server that the client would prefer HTTPS, which matters for sites that host mixed content.

- access-control-allow-origin: One of several CORS (cross-origin resource sharing) headers; it tells the browser which origins are allowed to read the response. I remember this being a sticking point when we built APIs in another class.

- etag: An ID for a specific version of a resource, which caches can use to check whether their copy is still current.

- cache-control: Specifies directives “that must be obeyed by all caching mechanisms along the request-response chain.” {wiki} Values I saw included: public, no-check, and max-age (the number of seconds a response stays fresh).

- connection: Control options for the current connection. Values I saw: close and keep-alive.

- vary: “Tells downstream proxies how to match future request headers to decide whether the cached response can be used rather than requesting a fresh one from the origin server.” {wiki}

- x-xss-protection: Controls the browser’s built-in cross-site scripting (XSS) filter.

- surrogate-key: A header Fastly uses to tag cached content, so that all the URLs sharing a key can be purged at once.

- edge-cache-tag: A header Akamai customers use to tag cached content so it can be purged by tag.

- CF-RAY: Helps trace requests through Cloudflare’s network.
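Several of these values, like cache-control, are really small comma-separated lists of directives. A rough Python sketch of splitting one apart, assuming simple values: it splits naively on commas, so a quoted argument containing a comma would break it, and the max-age=3600 value is only an illustration, not one I recorded.

```python
def parse_cache_control(value: str) -> dict:
    """Split a Cache-Control value into {directive: argument or None}."""
    directives = {}
    for part in value.split(","):  # naive: assumes no commas inside quoted arguments
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def max_age_seconds(value: str):
    """Return max-age as an int, or None if it is missing or not a number."""
    arg = parse_cache_control(value).get("max-age")
    if arg is None or not arg.isdigit():
        return None
    return int(arg)


print(parse_cache_control("public, max-age=3600"))
print(max_age_seconds("public, max-age=3600"))
```

Directive names are lowercased because HTTP treats them case-insensitively.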

It turns out HTTP header naming conventions changed in 2012: RFC 6648 deprecated starting new headers with X-. Nonetheless, I found several:

- x-HW

- x-cache

- x-type

- x-content-type-options

- X-Host
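Header names are case-insensitive, which is why x-HW and X-Host can both show up with different capitalization. A minimal sketch that parses a raw HTTP response header block and picks out the X- headers; the sample response and its values are hypothetical.

```python
from __future__ import annotations


def parse_headers(raw: str) -> list[tuple[str, str]]:
    """Parse the 'Name: value' lines of a raw HTTP response into (name, value) pairs."""
    pairs = []
    for line in raw.split("\r\n")[1:]:  # skip the status line, e.g. "HTTP/1.1 200 OK"
        if not line:
            break  # an empty line ends the header section
        name, _, value = line.partition(":")
        pairs.append((name.strip(), value.strip()))
    return pairs


def x_headers(pairs: list[tuple[str, str]]) -> list[str]:
    """Return header names starting with X-, compared case-insensitively."""
    return [name for name, _ in pairs if name.lower().startswith("x-")]


# Hypothetical response; the header values are made up for illustration.
raw = (
    "HTTP/1.1 200 OK\r\n"
    "Content-Type: text/html\r\n"
    "X-Cache: HIT\r\n"
    "x-content-type-options: nosniff\r\n"
    "\r\n"
)
print(x_headers(parse_headers(raw)))
```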

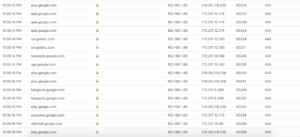

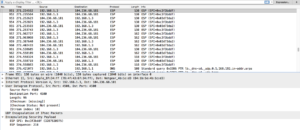

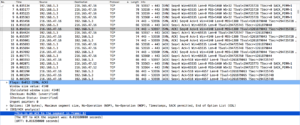

I did some tests, too. I found there was a lot of traffic between my computer and different Google services when I signed into Gmail:

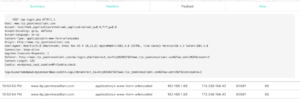

And I replicated the WordPress username & password problem we noticed in class.

I found that you could circumvent this problem by forcing HTTPS, i.e. by typing https:// into the address bar.

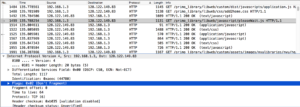
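Assuming the class problem was that the login form sent the username and password in cleartext over plain HTTP, this sketch shows why that is readable: a form POST body is just URL-encoded text. The field names log and pwd are my assumption about the standard WordPress login form, and the credentials and example.com host are made up.

```python
from urllib.parse import parse_qs, urlencode

# Hypothetical credentials; "log" and "pwd" are ASSUMED field names for
# the standard WordPress login form, and example.com is a placeholder host.
body = urlencode({"log": "alice", "pwd": "s3cret pass"})
request = (
    "POST /wp-login.php HTTP/1.1\r\n"
    "Host: example.com\r\n"
    "Content-Type: application/x-www-form-urlencoded\r\n"
    f"Content-Length: {len(body)}\r\n"  # body is ASCII, so characters == bytes
    "\r\n"
    + body
)

# Over plain HTTP these bytes cross the network unencrypted, so anyone who
# captures them can split off the body and decode the form fields.
captured_body = request.split("\r\n\r\n", 1)[1]
print(parse_qs(captured_body))
```

With HTTPS, the same request is sent inside an encrypted TLS connection, so a sniffer sees only encrypted records.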

Using Wireshark

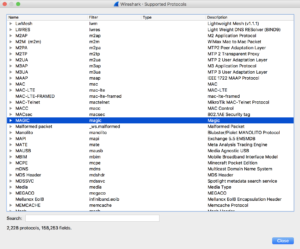

Looking at HTTP traffic using Herbivore was really interesting and fun. But I was left with questions about the other protocols my computer was using in order for me to use the internet. I wondered what other kind of traffic was observable and what kind of metadata would be available on the protocols I’ve been taught are secure. Can you see SSH? VPN? Email? I knew from an accidental experiment using Herbivore that you could not see web traffic when using a VPN. But would you be able to see something using Wireshark?

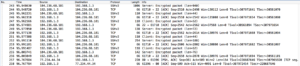

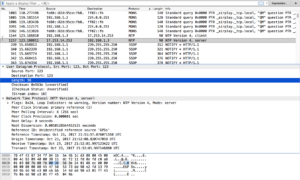

To figure out Wireshark, I captured all the traffic on my computer while navigating to the NYU Libraries site. This is what I think I learned.

The Internet Protocol Suite wiki page helped me understand the Wireshark output and its references to frames: https://en.wikipedia.org/wiki/Internet_protocol_suite
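The layering on that page is easiest for me to see as nesting: each layer wraps the one above it in its own header. A rough Python sketch that wraps an application payload in a UDP header and then a minimal IPv4 header (checksums left at 0; addresses and ports are made up). A link-layer frame such as Ethernet or Wi-Fi would wrap this once more, which is the "Frame" Wireshark shows at the top of each packet.

```python
import socket
import struct


def udp_segment(src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Transport layer: an 8-byte UDP header in front of the payload.
    A checksum of 0 means "no checksum", which UDP over IPv4 allows."""
    length = 8 + len(payload)
    return struct.pack("!HHHH", src_port, dst_port, length, 0) + payload


def ipv4_packet(src: str, dst: str, protocol: int, segment: bytes) -> bytes:
    """Internet layer: a minimal 20-byte IPv4 header (no options).
    The header checksum is left at 0 for brevity, so this is not sendable as-is."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,               # version 4, header length 5 x 32-bit words = 20 bytes
        0,                  # type of service
        20 + len(segment),  # total length of header + data
        0, 0,               # identification, flags/fragment offset
        64,                 # time to live
        protocol,           # 17 = UDP, 6 = TCP
        0,                  # header checksum (not computed)
        socket.inet_aton(src),
        socket.inet_aton(dst),
    )
    return header + segment


app_data = b"hello"                                              # application layer
segment = udp_segment(50000, 50001, app_data)                    # transport layer
packet = ipv4_packet("192.168.1.2", "192.168.1.1", 17, segment)  # internet layer

for layer, chunk in [("application", app_data), ("UDP", segment), ("IPv4", packet)]:
    print(f"{layer}: {len(chunk)} bytes")
```

Each layer only adds its own header and treats everything inside as opaque payload, which is why Wireshark can show the layers as a collapsible tree.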

- My computer is talking to my router using DNS, and my router is responding using DNS. I think my router is looking up the IP address for the domain name I requested, so my computer knows where to send the request.

- It looks like my router is also doing some other multicast stuff. I don’t know a lot about multicast, but when I looked up the protocols my router was using (ICMPv6, IGMPv2, and SSDP), they all seemed to be ways to discover devices or to “establish a multicast group membership.”

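- Usually it was my router using these protocols with these weird IP addresses, but sometimes it was actually my computer. In these cases “M-SEARCH” is indicated instead of “NOTIFY.” From what I’ve read, in SSDP an M-SEARCH is a device searching the network for other devices, while a NOTIFY is a device announcing itself, which would fit my computer searching and my router advertising.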

- My computer is also talking to the website I’m trying to reach via HTTP.

- There is also a bunch of TCP traffic between my computer and the site at NYU I was connecting to.

- NTP is used for clock synchronization (application layer). You can see it runs over UDP port 123. This was cool to find.

- You can also see the whole Transport Layer Security (TLS) handshake. I’m guessing it’s okay that you can see the session ticket. It also tells you whether your session is reusing “previously negotiated keys,” i.e. resuming an earlier session.
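Many of the labels Wireshark puts on this traffic line up with well-known port numbers. Here is a simplified Python sketch of that idea. Real dissectors also use heuristics and conversation state, and any service can run on a nonstandard port, so treat this as an approximation; the sample packets are hypothetical.

```python
# Well-known (transport, port) pairs for the protocols in the list above.
WELL_KNOWN = {
    ("udp", 53): "DNS",
    ("udp", 123): "NTP",
    ("udp", 1900): "SSDP",
    ("tcp", 80): "HTTP",
    ("tcp", 443): "HTTPS (TLS)",
}


def guess_protocol(transport: str, src_port: int, dst_port: int) -> str:
    """Label a packet by whichever of its ports is well known (usually the server's)."""
    for port in (dst_port, src_port):
        label = WELL_KNOWN.get((transport.lower(), port))
        if label:
            return label
    return f"unknown {transport.upper()}"


# Hypothetical packets: (transport, source port, destination port).
packets = [("udp", 51234, 53), ("udp", 53, 51234), ("udp", 123, 123),
           ("tcp", 52000, 443), ("udp", 50000, 1900)]
for p in packets:
    print(p, guess_protocol(*p))
```

Checking the destination port first works for requests, and falling back to the source port catches replies, like the DNS answer coming back from port 53.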

To my questions:

- Can you see SSH? Yes, and the traffic looks the same as SFTP, which makes sense because SFTP runs over SSH.

- VPN? Yes: while connected, traffic appears as “Encapsulating Security Payload” (ESP).

- Email? I’m not sure. I used Gmail in the browser, which probably talks to Google’s servers over HTTPS rather than SMTP. SMTP is a protocol Wireshark can decode, and it did not appear. But there was traffic that could have been my email being sent and Gmail updating the page.
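On the VPN answer: ESP (IP protocol number 50) leaves only two fields readable, a Security Parameters Index that identifies the connection and a sequence number; the rest of the packet is opaque to an observer. A minimal sketch of reading those fields from a made-up packet. Some VPN setups also wrap ESP in UDP port 4500 to get through NAT, so it may not always show up as bare ESP.

```python
import struct

ESP_PROTOCOL = 50  # value of the IPv4 "protocol" field when the payload is ESP


def parse_esp(payload: bytes) -> dict:
    """Read the two cleartext ESP fields; everything after them is opaque."""
    if len(payload) < 8:
        raise ValueError("too short to be an ESP packet")
    spi, sequence = struct.unpack("!II", payload[:8])
    return {"spi": spi, "sequence": sequence, "opaque_bytes": len(payload) - 8}


# Made-up ESP payload: SPI 0xC0FFEE01, sequence number 7, then 64 opaque bytes.
sample = struct.pack("!II", 0xC0FFEE01, 7) + bytes(64)
info = parse_esp(sample)
print(hex(info["spi"]), info["sequence"], info["opaque_bytes"])
```

So Wireshark can tell you that a VPN is in use, how much data is flowing, and roughly how many packets were sent, but not what sites or protocols are inside.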

More questions

I’m really curious to interface Wireshark with an SDR to see other kinds of signals! I began down this path using this RTL-SDR tutorial, but ended up stuck on two fronts. First, I was using the VMware installation they suggested, but the Ubuntu machine would not detect the SDRs connected to it. Second, when I tried to detect GSM traffic on a machine where the SDRs were detected, it was not clear any signal was coming through; I couldn’t even find my own cell signals. This is annoying and requires more investigating, but is luckily out of the scope of this week’s assignment.